Color Space, Transform, Threshold

Mat green=Mat(1,1,CV_8UC3,Scalar(0,255,0)),hsv_green;

cv::cvtColor(green,hsv_green,cv::COLOR_BGR2HSV);

Vec3b value=hsv_green.at<Vec3b>(0,0);

Now take [H-10, 100, 100] and [H+10, 255, 255] as the lower and upper bounds, respectively, where H is the hue component value[0].
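The bound selection above can be sketched without OpenCV. The following is a minimal illustration, assuming OpenCV's 8-bit HSV convention (H stored as degrees divided by 2, so 0..179; S and V in 0..255). The helper names `bgr_to_opencv_hsv`, `hue_bounds`, and `in_range` are hypothetical and only approximate what `cvtColor` and `inRange` do for a single pixel.

```python
import colorsys

def bgr_to_opencv_hsv(b, g, r):
    """Approximate OpenCV's 8-bit BGR-to-HSV result for one pixel (assumption: H = degrees / 2)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return int(round(h * 180)) % 180, int(round(s * 255)), int(round(v * 255))

def hue_bounds(h, margin=10):
    """Build [H-margin, 100, 100] and [H+margin, 255, 255]; hue wraparound (e.g. red) is not handled."""
    lower = (h - margin, 100, 100)
    upper = (h + margin, 255, 255)
    return lower, upper

def in_range(pixel, lower, upper):
    """Per-pixel test in the style of inRange: 255 if every channel lies within its bounds, else 0."""
    inside = all(lo <= p <= hi for p, lo, hi in zip(pixel, lower, upper))
    return 255 if inside else 0

# Pure green in BGR order, as in the snippet above
green_hsv = bgr_to_opencv_hsv(0, 255, 0)
lower, upper = hue_bounds(green_hsv[0])
print(green_hsv, lower, upper)
```

For colors whose hue is close to 0 or 179, the range would have to be split into two intervals; this sketch leaves that case out.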

Object Color Tracking by inRange

# Take each frame

_, frame = cap.read()

# Convert BGR to HSV

hsv = cv.cvtColor(frame, cv.COLOR_BGR2HSV)

# define range of blue color in HSV

lower_blue = np.array([110,50,50])

upper_blue = np.array([130,255,255])

# Threshold the HSV image to get only blue colors

mask = cv.inRange(hsv, lower_blue, upper_blue)

# Bitwise-AND mask and original image

res = cv.bitwise_and(frame,frame, mask= mask)

Transformations

OpenCV provides two transformation functions, warpAffine and warpPerspective, with which you can perform all kinds of transformations. warpAffine takes a 2×3 transformation matrix while warpPerspective takes a 3×3 transformation matrix as input.

Resize Image

Scaling is just resizing the image. OpenCV provides the function resize() for this purpose. The output size can be specified explicitly, or you can specify scaling factors instead. Several interpolation methods are available: INTER_AREA is preferable for shrinking, and INTER_CUBIC (slow) or INTER_LINEAR for zooming. By default, INTER_LINEAR is used for all resizing. You can resize an input image with either of the following methods:

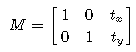
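As a rough sketch of the two ways to specify the output, the helper below derives the output size from scaling factors and picks an interpolation method following the guidance above. The function name `plan_resize` and the rule "smaller output area means shrinking" are assumptions made for illustration; the flag names are returned as plain strings, so OpenCV is not required to run it.

```python
def plan_resize(width, height, fx, fy):
    """Return ((new_width, new_height), interpolation_name) for the given scale factors."""
    # Output size derived from the scale factors, rounded to whole pixels
    new_w = int(round(fx * width))
    new_h = int(round(fy * height))
    # Assumption: treat the operation as shrinking when the output area is smaller
    shrinking = new_w * new_h < width * height
    interpolation = "INTER_AREA" if shrinking else "INTER_LINEAR"
    return (new_w, new_h), interpolation

# Halving and doubling a hypothetical 640x480 image
print(plan_resize(640, 480, 0.5, 0.5))
print(plan_resize(640, 480, 2, 2))
```

The returned size corresponds to the explicit-size form of the call, and the returned name to the interpolation flag one would pass alongside it.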

resize(LoadedImage, LoadedImage, Size(100, 100));

Translate

warpAffine( src, warp_dst, warp_mat, warp_dst.size() );

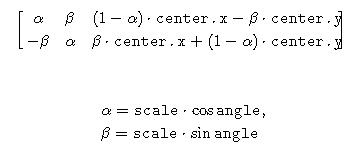
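To make the role of the 2×3 matrix concrete, here is a small sketch that builds a translation matrix for a shift of (tx, ty) and applies it to one point. The helpers `translation_matrix` and `apply_affine` are hypothetical names; they only mimic the per-point arithmetic that warpAffine performs with such a matrix.

```python
def translation_matrix(tx, ty):
    """2x3 affine matrix that shifts points by (tx, ty)."""
    return [[1.0, 0.0, float(tx)],
            [0.0, 1.0, float(ty)]]

def apply_affine(m, x, y):
    """Map (x, y) through a 2x3 affine matrix."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

# Shift by 100 pixels in x and 50 pixels in y (illustrative values)
warp_mat = translation_matrix(100, 50)
moved = apply_affine(warp_mat, 10, 20)
print(moved)
```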

Rotation

Mat cv::getRotationMatrix2D ( Point2f center, double angle, double scale )

M = cv.getRotationMatrix2D(((cols-1)/2.0,(rows-1)/2.0),90,1)

dest = cv.warpAffine(img,M,(cols,rows))
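The matrix returned for a rotation about an arbitrary center can be sketched in plain Python. This follows the formula given in the OpenCV documentation for getRotationMatrix2D (alpha = scale·cos(angle), beta = scale·sin(angle)); the function names and the 400×300 image size are assumptions for illustration.

```python
import math

def rotation_matrix_2d(center, angle_deg, scale):
    """2x3 matrix rotating about `center`; positive angles are counter-clockwise on screen."""
    cx, cy = center
    theta = math.radians(angle_deg)
    alpha = scale * math.cos(theta)
    beta = scale * math.sin(theta)
    return [[alpha, beta, (1 - alpha) * cx - beta * cy],
            [-beta, alpha, beta * cx + (1 - alpha) * cy]]

def apply_affine(m, x, y):
    """Map (x, y) through a 2x3 affine matrix."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

# Hypothetical image size; the center matches the ((cols-1)/2.0, (rows-1)/2.0) choice above
cols, rows = 400, 300
center = ((cols - 1) / 2.0, (rows - 1) / 2.0)
M = rotation_matrix_2d(center, 90, 1)

# The center stays fixed; a point one pixel to its right ends up one pixel above it
print(apply_affine(M, center[0], center[1]))
print(apply_affine(M, center[0] + 1, center[1]))
```

The translation column is what keeps the chosen center in place; without it the rotation would be about the image origin at the top-left corner.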

Affine Transformation

In affine transformation, all parallel lines in the original image will still be parallel in the output image. To find the transformation matrix, we need three points from the input image and their corresponding locations in the output image. Then getAffineTransform will create a 2×3 matrix which is to be passed to warpAffine.

Mat cv::getAffineTransform ( const Point2f src[], const Point2f dst[] )

Parameters

src Coordinates of triangle vertices in the source image.

dst Coordinates of the corresponding triangle vertices in the destination image.
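How three point pairs determine the 2×3 matrix can be sketched as two small linear systems, one per output coordinate. The code below solves them with Cramer's rule in plain Python; it is an illustration of the idea rather than OpenCV's implementation, and the helper names and sample coordinates are assumptions.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(a, rhs):
    """Solve a 3x3 system with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("source points are collinear")
    solution = []
    for col in range(3):
        replaced = [row[:] for row in a]
        for i in range(3):
            replaced[i][col] = rhs[i]
        solution.append(det3(replaced) / d)
    return solution

def affine_from_points(src, dst):
    """Return the 2x3 matrix mapping the three src points onto the three dst points."""
    a = [[float(x), float(y), 1.0] for x, y in src]
    row_x = solve3(a, [u for u, _ in dst])  # coefficients producing the output x
    row_y = solve3(a, [v for _, v in dst])  # coefficients producing the output y
    return [row_x, row_y]

def apply_affine(m, x, y):
    """Map (x, y) through a 2x3 affine matrix."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

# Illustrative triangle vertices (not taken from the text above)
src = [(50, 50), (200, 50), (50, 200)]
dst = [(10, 100), (200, 50), (100, 250)]
M = affine_from_points(src, dst)
print(M)
```

The determinant check also explains why the three source points must form a real triangle: collinear points leave the system without a unique solution.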

Perspective Transformation

For perspective transformation, you need a 3×3 transformation matrix. Straight lines remain straight after the transformation. To find this matrix, you need four points on the input image and their corresponding points on the output image. Among these four points, no three may be collinear. The transformation matrix can then be found with the function getPerspectiveTransform and applied with warpPerspective.

Mat cv::getPerspectiveTransform ( InputArray src, InputArray dst, int solveMethod = DECOMP_LU )

pts1 = np.float32([[56,65],[368,52],[28,387],[389,390]])

pts2 = np.float32([[0,0],[300,0],[0,300],[300,300]])

M = cv.getPerspectiveTransform(pts1,pts2)

dst = cv.warpPerspective(img,M,(300,300))
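The four point pairs fix the eight unknowns of the 3×3 matrix (with the bottom-right entry set to 1). A minimal sketch of that computation, using plain Gaussian elimination instead of OpenCV's solver, is shown below with the same points as above. The function names are hypothetical, and the division by w in `apply_perspective` is what distinguishes a perspective mapping from an affine one.

```python
def solve_linear(a, b):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        total = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - total) / aug[r][r]
    return x

def perspective_from_points(src, dst):
    """Return the 3x3 matrix (bottom-right entry 1) mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11*x + h12*y + h13) / (h31*x + h32*y + 1), rearranged to be linear in h
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        # v = (h21*x + h22*y + h23) / (h31*x + h32*y + 1)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]

def apply_perspective(m, x, y):
    """Map (x, y) through a 3x3 matrix, dividing by the homogeneous coordinate w."""
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    return ((m[0][0] * x + m[0][1] * y + m[0][2]) / w,
            (m[1][0] * x + m[1][1] * y + m[1][2]) / w)

pts1 = [(56, 65), (368, 52), (28, 387), (389, 390)]
pts2 = [(0, 0), (300, 0), (0, 300), (300, 300)]
M = perspective_from_points(pts1, pts2)
print(apply_perspective(M, 56, 65))
```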